I design diffusion language models for parallel, flexible-order text generation. My PhD research explores novel diffusion language modeling paradigms and architectures to enhance their quality, generation speed, and training efficiency. Notably, I led the development of Block Diffusion, which was featured as an oral at ICLR 2025.

I am interning at NVIDIA Research with Pavlo Molchanov and have previously interned at Runway AI with Anastasis Germanidis. My CV is available here.

Selected Works

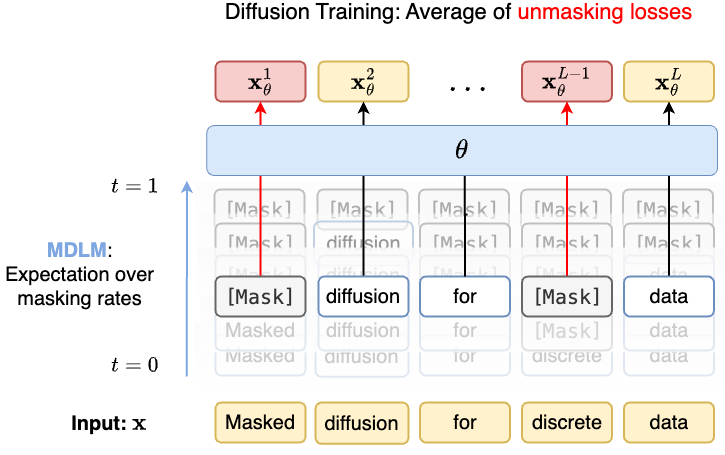

Arriola, M., Gokaslan, A., Chiu, J. T., Yang, Z., Qi, Z., Han, J., Sahoo, S. S., Kuleshov, V. Block Diffusion: Interpolating Between Autoregressive and Diffusion Language Models. ICLR 2025 (Oral, Top 1.77%). [Paper] [Blog] [Code] [Slides]

Arriola, M.*, Schiff, Y.*, Phung, H., Gokaslan, A., Kuleshov, V. Encoder-Decoder Block Diffusion Language Models for Efficient Training and Inference. NeurIPS 2025. [Paper] [Blog] [Code] [Slides]

Arriola, M., Venkat, N., Granskog, J., Germanidis, A. Adapting Autoregressive Vision Language Models for Parallel Diffusion Decoding. Runway Research Blog. [Blog]

News

- Feb-26: I gave a talk at Meta FAIR on E2D2.

- Jan-26: I advanced to PhD candidacy! I will also be starting an internship at NVIDIA Research!

- Oct-25: Encoder-decoder diffusion LMs paper accepted to NeurIPS 2025. I was also recognized as a Top Reviewer!

- Jun-25: Started a summer internship at Runway in NYC!

- Apr-25: Presenting Block Diffusion as an oral at ICLR 2025, main track.

- Apr-25: Invited talk at Amazon AGI on Block Diffusion.